Introduction

Mobile applications can hand demanding work, such as image classification, to nearby edge devices. This blog documents a project completed for CSE 535 Mobile Computing that integrates server-side processing for image classification on edge devices. It combines Android application development, a convolutional neural network (CNN), and server integration to show how offloading can extend what a mobile application is able to do.

Project Overview

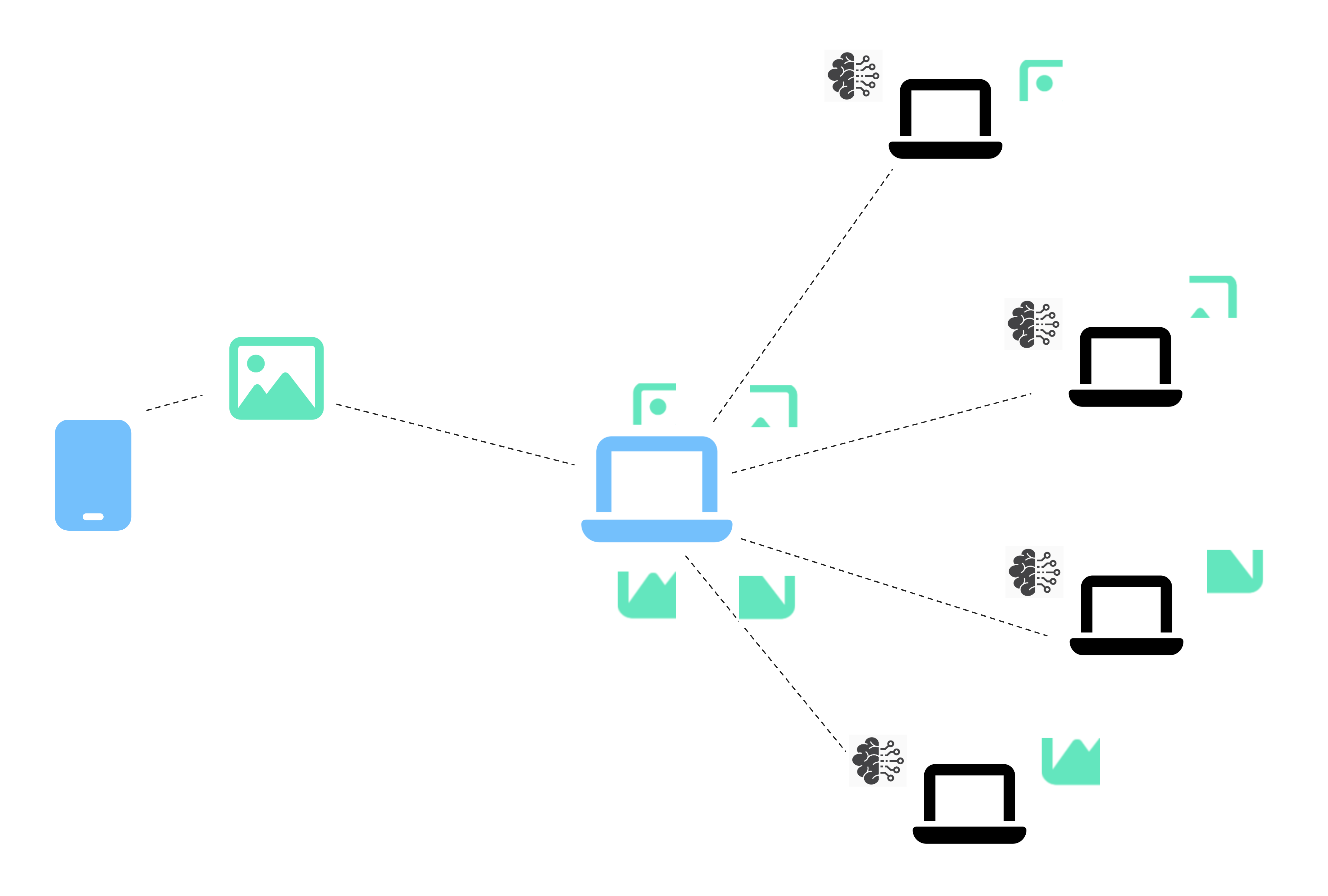

This project uses an Android application to divide captured images into parts, which are then classified on edge devices such as mobile phones and laptops. Using a CNN model trained on the MNIST dataset, the goal is to classify handwritten digits accurately and organize the images into folders according to the digit they contain.

Implementation Details

Building the Android Application

- MainActivity.java and ImageCaptureActivity.java formed the core of the application, with XML layout files defining the user interface.

- The captured image was divided into four parts using bitmap manipulation, so that each part could be sent to a separate edge device for processing.

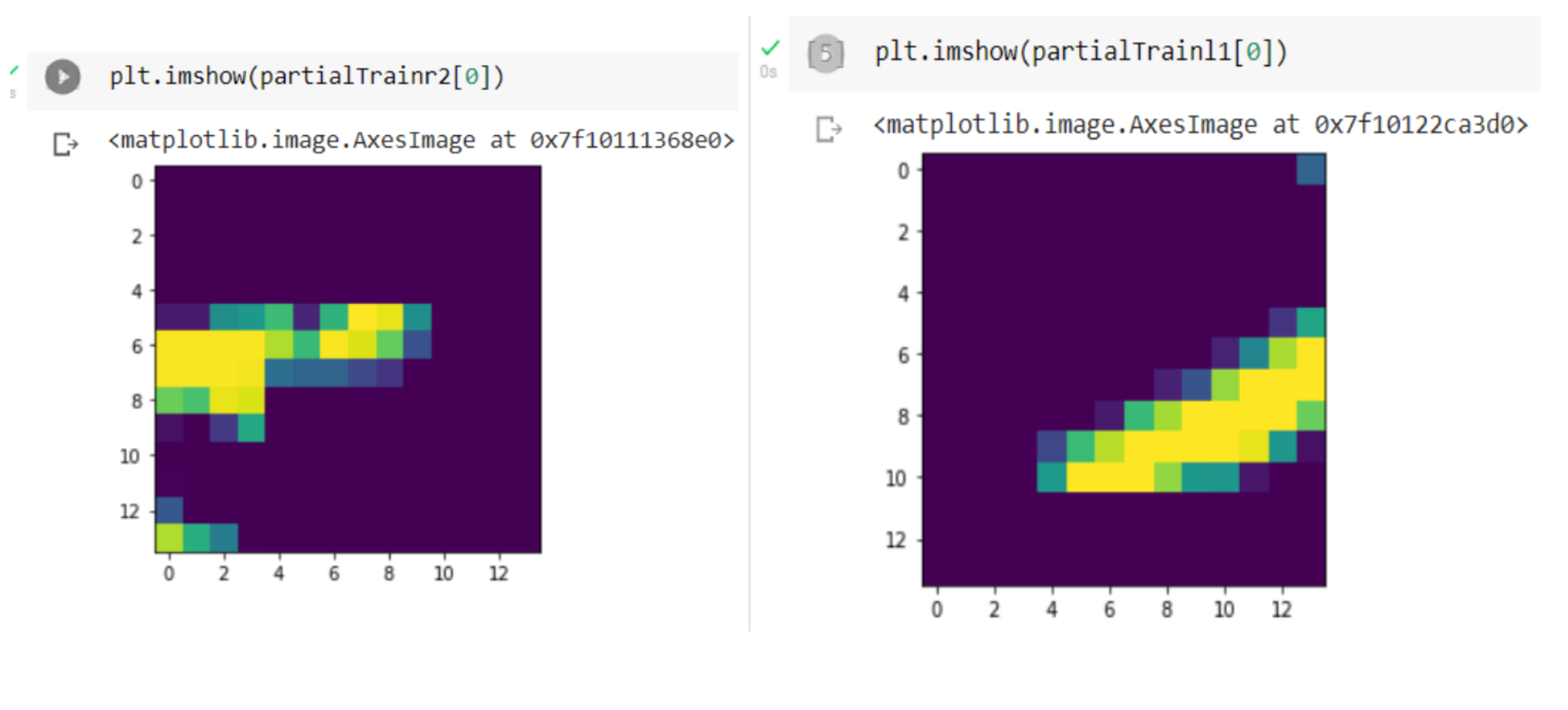

Dividing the Image

- Using bitmap division, each captured image was sliced into smaller parts that could be processed in parallel.

- Each edge device, acting as a server, received a different image part to classify, with the aim of spreading the workload across devices and reducing latency.
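
The division step can be sketched in a few lines. This is a minimal illustration, not the app's actual code: the real application works on Android bitmaps in Java, where `Bitmap.createBitmap(source, x, y, width, height)` is one way to crop a region. The sketch below assumes the four parts form a 2×2 grid (the post does not say whether quadrants or strips were used), treats the image as a plain 2D list of pixel values, and uses the hypothetical name `split_into_quadrants`.

```python
from typing import List, Sequence

Image = List[List[int]]


def split_into_quadrants(image: Sequence[Sequence[int]]) -> List[Image]:
    """Split a 2D pixel grid into four parts.

    Order: top-left, top-right, bottom-left, bottom-right.
    Assumption: a 2x2 grid layout; the original post only says "four parts".
    """
    height = len(image)
    if height == 0 or len(image[0]) == 0:
        raise ValueError("image must have at least one row and one column")
    width = len(image[0])
    if any(len(row) != width for row in image):
        raise ValueError("all rows must have the same width")

    mid_y, mid_x = height // 2, width // 2
    # For odd dimensions the extra row/column goes to the bottom/right parts.
    top, bottom = image[:mid_y], image[mid_y:]
    return [
        [list(row[:mid_x]) for row in top],
        [list(row[mid_x:]) for row in top],
        [list(row[:mid_x]) for row in bottom],
        [list(row[mid_x:]) for row in bottom],
    ]


if __name__ == "__main__":
    # A 4x4 "image" whose pixel values are simply 0..15.
    demo = [[r * 4 + c for c in range(4)] for r in range(4)]
    for part in split_into_quadrants(demo):
        print(part)
```

Each of the four returned parts would then be sent to a different edge device.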

Deep Learning Model

- A CNN model with an accuracy of 80.34% on the MNIST dataset was used for image classification.

- We used a CNN model with one input layer, two hidden layers, and one output layer, with ReLU activation for the hidden layers and softmax for the output layer.
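
To show where the two activation functions sit, here is a rough forward pass in plain Python. It is only a sketch under stated assumptions: the post does not list the layer types or sizes, so the example uses tiny fully connected layers with made-up weights (a real MNIST model would take 784 pixel inputs and produce 10 outputs), and the names `dense` and `forward` are hypothetical helpers, not the project's code.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, biases)


def relu(values: Vector) -> Vector:
    """ReLU: negative values become 0, positive values pass through."""
    return [max(0.0, v) for v in values]


def softmax(values: Vector) -> Vector:
    """Softmax: turn raw scores into probabilities that sum to 1."""
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Fully connected layer; weights[j] feeds output unit j."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs: Vector, layers: List[Layer]) -> Vector:
    """ReLU after every hidden layer, softmax after the final layer."""
    activations = inputs
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(activations, weights, biases))


if __name__ == "__main__":
    # Hypothetical toy network: 2 inputs -> 2 hidden -> 2 hidden -> 3 classes.
    identity = [[1.0, 0.0], [0.0, 1.0]]
    toy_layers = [
        (identity, [0.0, 0.0]),
        (identity, [0.0, 0.0]),
        ([[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]], [0.0, 0.0, 0.0]),
    ]
    probabilities = forward([1.0, -1.0], toy_layers)
    print(probabilities, "predicted class:", probabilities.index(max(probabilities)))
```

The index of the largest softmax output is the predicted class, and its value can serve as the classification confidence.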

Server Integration

- Flask servers, each loaded with the CNN model, waited for image parts from the main mobile application and returned their classifications.

- The Retrofit and OkHttp3 libraries handled communication between the mobile application and the edge servers, both for sending image parts and for retrieving the responses.

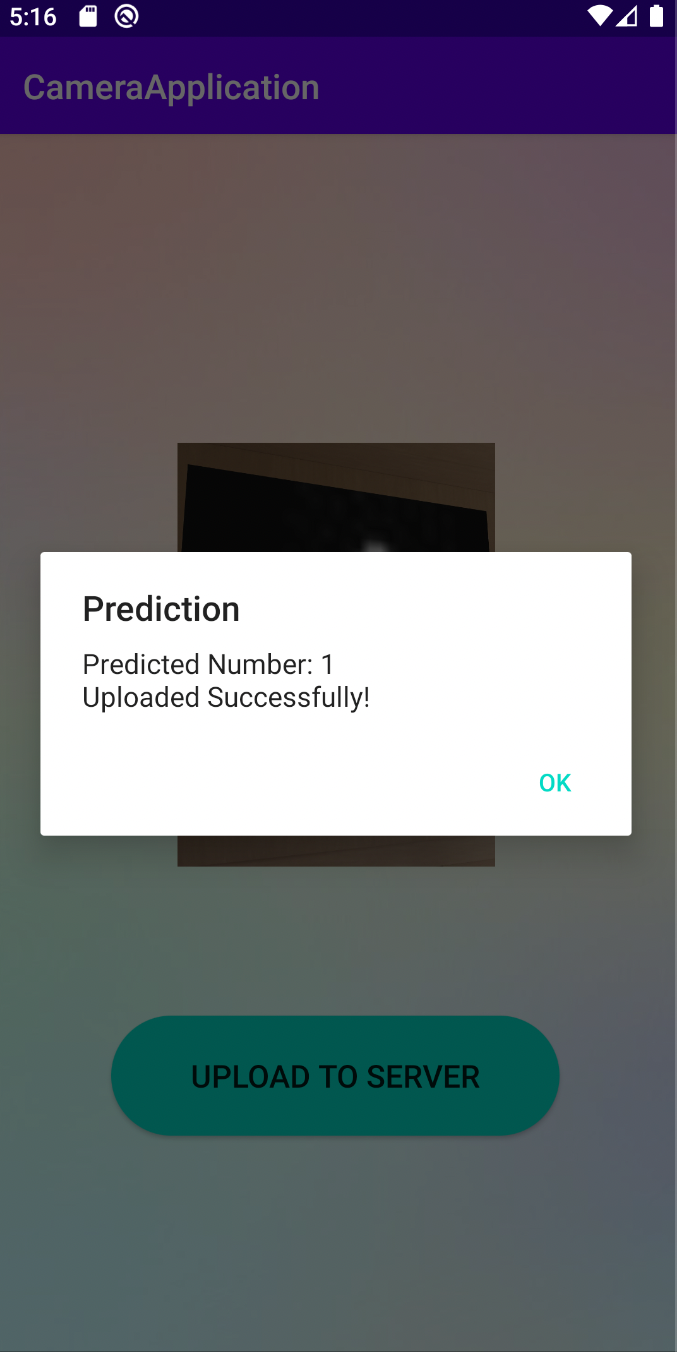

- Upon receiving the predictions, the mobile application stored the original image in its designated folder based on classification confidence, completing the end-to-end flow.
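
The final storage step might look roughly like the sketch below. The post does not say how the predictions from the different servers are combined, so this example assumes the simplest rule: take the prediction with the highest confidence and copy the original image into a folder named after that digit. The function names `pick_most_confident` and `store_by_digit` are hypothetical, and the real application does this in Java on the device.

```python
import shutil
import tempfile
from pathlib import Path
from typing import List, Tuple

Prediction = Tuple[int, float]  # (predicted digit, confidence between 0 and 1)


def pick_most_confident(predictions: List[Prediction]) -> Prediction:
    """Return the prediction with the highest confidence.

    Assumption: the post does not describe the combination rule.
    """
    if not predictions:
        raise ValueError("at least one prediction is required")
    return max(predictions, key=lambda prediction: prediction[1])


def store_by_digit(
    image_path: Path, predictions: List[Prediction], output_root: Path
) -> Path:
    """Copy the original image into output_root/<digit>/ and return the new path."""
    digit, _confidence = pick_most_confident(predictions)
    target_dir = output_root / str(digit)
    target_dir.mkdir(parents=True, exist_ok=True)
    destination = target_dir / image_path.name
    shutil.copy2(image_path, destination)
    return destination


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        capture = root / "capture.png"
        capture.write_bytes(b"placeholder image bytes")
        # Hypothetical responses from four edge servers.
        responses = [(3, 0.41), (7, 0.88), (3, 0.52), (1, 0.10)]
        saved = store_by_digit(capture, responses, root / "sorted")
        print(saved.relative_to(root))
```

Other rules, such as a majority vote across the servers, would fit the same structure by replacing `pick_most_confident`.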

Results

We implemented and demonstrated the following:

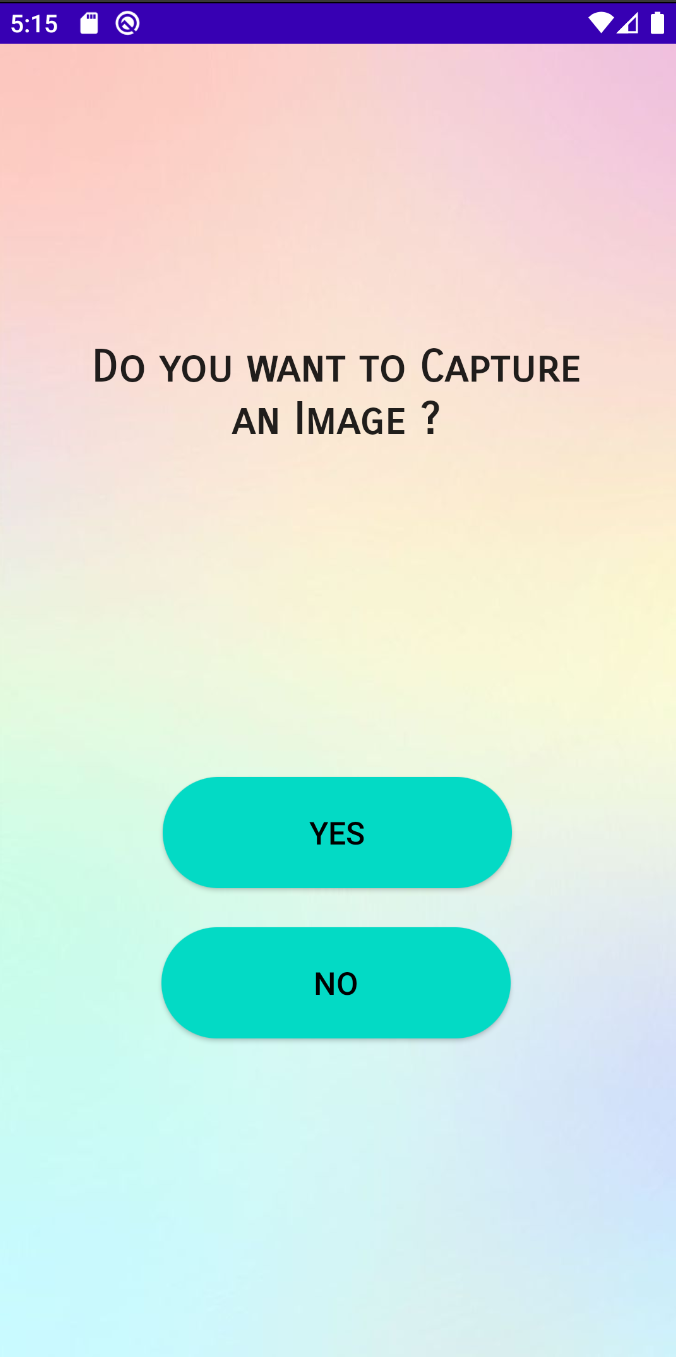

- Launching and navigating the Android application's user interface.

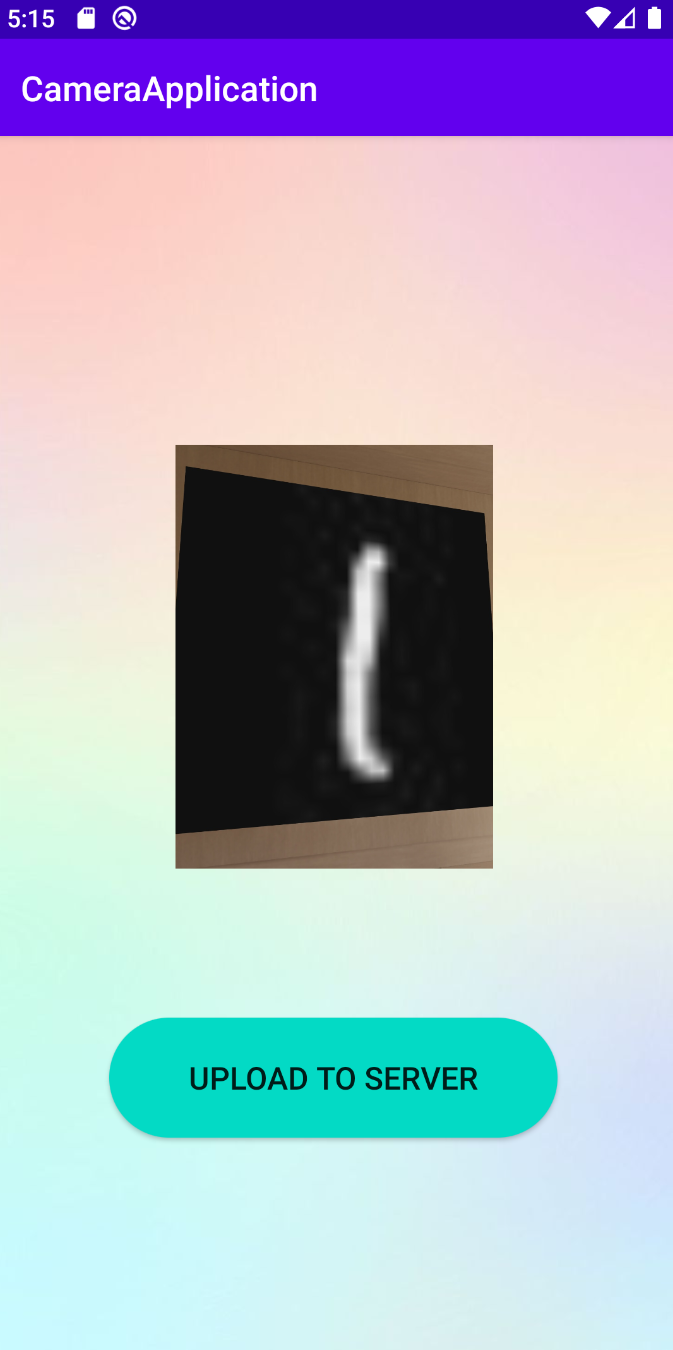

- Capturing images using the device's camera and initiating the image classification process.

- Communication between the mobile application and the edge servers.

- Real-time classification of handwritten digits, with images organized into folders based on the results returned by the edge servers.

Conclusion

In summary, this project shows how server-side processing on edge devices can be integrated into a mobile image classification workflow. By combining Android application development, deep learning, and server integration, it demonstrates the potential of offloading for real-world mobile applications.

Source Code

Explore the source code of this project on GitHub: GitHub Repository