This blog aims to guide you through the process of programming a Pololu robot to execute a specific set of actions: ascending a ramp, recognizing the summit, changing direction, and safely descending the ramp without the risk of falling off. We will delve into best practices for designing an embedded system, breaking down the process into modeling, designing, and analyzing.

Throughout this journey, we'll navigate the intricacies of implementing line sensing, feedback control, and accelerometer integration to ensure our robot successfully ascends a ramp, executes a turn at the top, and descends without veering off the edge.

Background

For this project, we will use Lingua Franca, the first reactor-oriented coordination language. It lets you specify reactive components, called reactors, and compose them. Lingua Franca's semantics eliminate race conditions by construction and provide a sophisticated model of time, including a clear and precise notion of simultaneity. This post explains enough of the language for you to follow the program, even if you have never used it.

To kickstart our project, we'll fork the lf-3pi-template repository, which comes with the pico-sdk so that we can get started quickly.

Understanding the Task at Hand

Our primary goal is to program the Pololu robot to perform a specific sequence: climb a ramp, detect the summit, turn around, and descend without falling off the edge. Key elements for this challenge include a ramp with a 15-degree slope, a flat turning surface at the top, a light-colored surface, and dark-colored edges for the robot to detect and avoid.

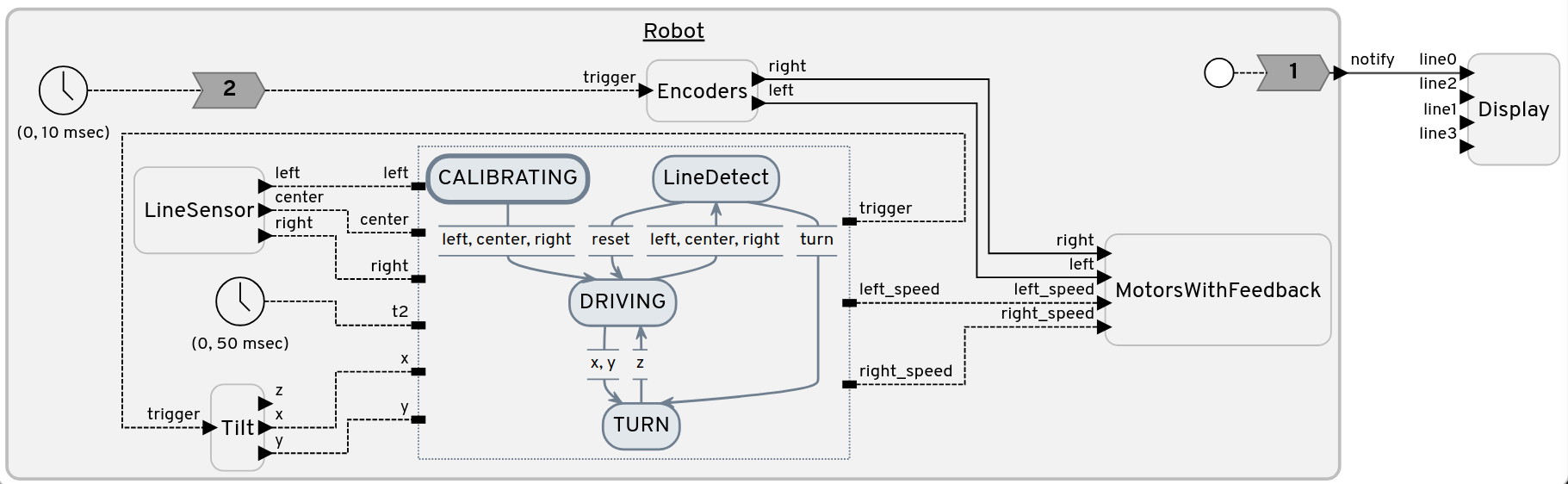

Modeling

Let's initiate our project by modeling it with a finite-state machine and outlining the sensors we'll use.

Sensors Utilized

Line Sensors

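The line sensors detect the dark edges of the wedge and keep the robot from falling off. The inertial sensors described below complement them. The accelerometer provides the robot's tilt (roll and pitch; an accelerometer alone cannot measure yaw). The gyroscope measures angular velocity, which is integrated to obtain precise turn angles.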
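Edge detection with reflectance sensors usually depends on a calibrated threshold, which is the idea behind the CALIBRATING state. The following is a minimal C sketch of min/max calibration. It is not the code of the LineSensor reactor used in this project. It assumes that larger raw readings mean a darker surface, that readings are grouped into three values (left, center, right), and that the robot sees both the light surface and a dark edge during the calibration window. `Calibration`, `calibration_update`, and `sees_line` are hypothetical names.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical: one reading per sensor group, matching the
 * left/center/right outputs used by the robot program. */
#define NUM_SENSORS 3

typedef struct {
    uint16_t min[NUM_SENSORS]; /* lightest (smallest) reading seen so far */
    uint16_t max[NUM_SENSORS]; /* darkest (largest) reading seen so far */
} Calibration;

void calibration_init(Calibration *c) {
    for (int i = 0; i < NUM_SENSORS; i++) {
        c->min[i] = UINT16_MAX;
        c->max[i] = 0;
    }
}

/* Call on every sample during the calibration period. */
void calibration_update(Calibration *c, const uint16_t raw[NUM_SENSORS]) {
    for (int i = 0; i < NUM_SENSORS; i++) {
        if (raw[i] < c->min[i]) c->min[i] = raw[i];
        if (raw[i] > c->max[i]) c->max[i] = raw[i];
    }
}

/* After calibration: sensor i sees the dark edge when its reading is
 * above the midpoint of its calibrated range. */
bool sees_line(const Calibration *c, int i, uint16_t raw) {
    uint16_t threshold = (uint16_t)((c->min[i] + c->max[i]) / 2);
    return raw > threshold;
}
```

A per-sensor midpoint threshold adapts to differences between individual sensors and to the lighting on the day of the run. A single hard-coded threshold would not.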

Encoders

The wheel encoders measure how far each wheel has turned, and each wheel's actual speed can be computed from these readings. The MotorsWithFeedback reactor uses the readings to adjust motor power so that the robot keeps a consistent speed both uphill and downhill.
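To show how encoder feedback can hold a speed on a slope, here is a small C sketch. It is not the implementation of MotorsWithFeedback. `COUNTS_PER_REV` and `WHEEL_DIAMETER_M` are hypothetical placeholders; substitute the values for your motors' gear ratio and wheels. `wheel_speed` and `adjust_power` are illustrative names.

```c
#include <stdint.h>

#define PI 3.14159265358979323846
#define COUNTS_PER_REV 360.0   /* hypothetical placeholder: encoder counts per wheel revolution */
#define WHEEL_DIAMETER_M 0.032 /* hypothetical placeholder: wheel diameter in meters */

/* Convert the change in encoder count over dt seconds into wheel speed (m/s). */
double wheel_speed(int32_t prev_count, int32_t count, double dt) {
    double revs = (double)(count - prev_count) / COUNTS_PER_REV;
    return revs * PI * WHEEL_DIAMETER_M / dt;
}

/* Nudge the motor power by kp times the speed error: more power when the
 * wheel is too slow (uphill), less when it is too fast (downhill).
 * The result is clamped to the valid power range [-1, 1]. */
double adjust_power(double power, double target_speed, double measured_speed, double kp) {
    double p = power + kp * (target_speed - measured_speed);
    if (p > 1.0) p = 1.0;
    if (p < -1.0) p = -1.0;
    return p;
}
```

Each correction is added to the previous power, so this update behaves like an integral controller. On a slope, a fixed power would leave the robot too slow or too fast. The controller keeps adjusting until the measured speed matches the target.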

Accelerometer

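The accelerometer gives the robot's tilt, which we use for feedback control. At a fixed period, the program adjusts each wheel's target speed to keep the roll measurement near zero, which keeps the robot pointed straight up or down the slope. The adjusted targets go to the MotorsWithFeedback reactor, which uses the encoders to compensate for the resulting changes in required motor power.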

Gyroscope

Utilize the gyroscope to calculate angular displacement for accurate turning of the bot.
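Angular displacement is the integral of the angular velocity that the gyroscope measures. The minimal C sketch below accumulates the angle with the rectangle rule and reports when the target is exceeded. It assumes the rate is in degrees per second and that samples arrive every `dt` seconds; the program samples the gyro on its 10 ms timer. `TurnTracker` and `turn_update` are hypothetical names, not part of the GyroAngle reactor.

```c
#include <math.h>
#include <stdbool.h>

typedef struct {
    double angle_deg; /* rotation accumulated since the turn started */
} TurnTracker;

void turn_start(TurnTracker *t) { t->angle_deg = 0.0; }

/* Integrate one gyro sample (deg/s) over dt seconds.
 * Returns true once the accumulated rotation exceeds target_deg,
 * regardless of turn direction. */
bool turn_update(TurnTracker *t, double rate_dps, double dt, double target_deg) {
    t->angle_deg += rate_dps * dt;
    return fabs(t->angle_deg) > target_deg;
}
```

At 90 deg/s with dt = 0.01 s, each sample adds 0.9 degrees, so a 20-degree turn completes on the 23rd sample. Any gyro bias is integrated as well, which makes the angle drift slowly. Restarting the integration at the beginning of every turn keeps that error small.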

(Note: for details on how each sensor is read, see the src directory.)

Finite State Machine

Our journey begins in the calibrating state, where we set the line sensor threshold. After a 5-second calibration, we transition to the driving state, in which the robot moves forward by default.

While moving forward, the robot may encounter a dark line or reach the end of the wedge. On detection, the robot records which sensors saw the line, backs up a few centimeters, and enters the LineDetect state. The decision in this state is based on the recorded readings. The robot makes a 20-degree turn away from the detected line, or a 180-degree turn if the end of the wedge is detected (the center sensor sees the line).
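The mode transitions described above can be written as a small transition function. This C sketch models only the mode changes, not the motor outputs. The `Inputs` fields are hypothetical boolean events that stand in for the sensor reactors. Checking the summit before the line sensors is an assumed priority.

```c
#include <stdbool.h>

typedef enum { CALIBRATING, DRIVING, LINE_DETECT, TURN } Mode;

typedef struct {
    bool calibration_done; /* calibration period finished */
    bool line_seen;        /* any line sensor sees a dark edge */
    bool at_summit;        /* flat top reached and U-turn not yet taken */
    bool backed_up;        /* finished reversing a few centimeters */
    bool turn_complete;    /* gyro angle exceeded the target */
} Inputs;

/* Return the next mode given the current mode and this step's events. */
Mode next_mode(Mode m, Inputs in) {
    switch (m) {
    case CALIBRATING:
        return in.calibration_done ? DRIVING : CALIBRATING;
    case DRIVING:
        if (in.at_summit) return TURN;        /* 180-degree U-turn at the top */
        if (in.line_seen) return LINE_DETECT; /* edge ahead: back up and decide */
        return DRIVING;
    case LINE_DETECT:
        return in.backed_up ? TURN : LINE_DETECT;
    case TURN:
        return in.turn_complete ? DRIVING : TURN;
    }
    return m;
}
```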

Preparing for the Climb

Before delving into the implementation, it's crucial to outline the key requirements for our robot program:

- Ascend and descend a ramp with a 15-degree slope.

- Detect dark-colored edges of the ramp using line sensors.

- Implement feedback motor control using accelerometer and encoders to adjust wheel speeds based on roll and encoder readings.

- Perform precise turns using gyroscope measurements.

Designing/Implementation

```lf
target C {
  platform: {
    name: "rp2040",
    board: "pololu_3pi_2040_robot"
  },
  threading: false,
}

import Display from "lib/Display.lf"
import GyroAngle from "lib/IMU.lf"
import LineSensor from "HillLineDetectSolution.lf"
import Tilt from "lib/Tilt.lf"
import Encoders from "lib/Encoders.lf"
import MotorsWithFeedback from "lib/MotorsWithFeedback.lf"

reactor Robot {
  preamble {=
    #include <math.h>  // fabs() for the gyro angle check
  =}

  output notify: string  // Notify of mode change.

  state turn_angle: int      // Target turn angle in degrees.
  state turn_direction: int  // +1 for left direction, -1 for right direction.
  state lineOnLeft: bool
  state lineOnCenter: bool
  state lineOnRight: bool
  state takenUTurn: bool = false

  lineSensor = new LineSensor()
  motors = new MotorsWithFeedback()
  encoder = new Encoders()
  encoder.right -> motors.right
  encoder.left -> motors.left
  tilt = new Tilt()

  timer t(0, 10 ms)   // Encoder and gyro sampling.
  timer t2(0, 50 ms)  // Tilt sampling.

  reaction(startup) -> notify {=
    lf_set(notify, "INIT:CALIBRATING");
  =}

  reaction(t) -> encoder.trigger {=
    lf_set(encoder.trigger, true);
  =}

  initial mode CALIBRATING {
    // The first line-sensor output marks the end of calibration.
    reaction(lineSensor.left, lineSensor.center, lineSensor.right) -> reset(DRIVING), notify {=
      lf_set_mode(DRIVING);
      lf_set(notify, "DRIVING");
    =}
  }

  mode DRIVING {
    reaction(reset) -> motors.left_speed, motors.right_speed {=
      lf_set(motors.left_speed, 0.2);  // 0.2 m/s
      lf_set(motors.right_speed, 0.2);
    =}

    reaction(t2) -> tilt.trigger {=
      lf_set(tilt.trigger, true);
    =}

    reaction(tilt.x, tilt.y) -> motors.left_speed, motors.right_speed, reset(TURN), notify {=
      // Pitch (tilt.x) is no longer positive: we have reached the top, so make a single U-turn.
      if (tilt.x->value <= 0 && !self->takenUTurn) {
        self->turn_angle = 180;
        self->turn_direction = 1;  // Direction doesn't matter.
        self->takenUTurn = true;
        lf_set_mode(TURN);
        lf_set(notify, "TURN");
      }
      // Steer to keep roll (tilt.y) near zero; the correction flips sign when descending.
      double dir = (tilt.x->value >= 0) ? 1.0 : -1.0;
      lf_set(motors.left_speed, 0.2 * (1.0 - 0.05 * tilt.y->value * dir));
      lf_set(motors.right_speed, 0.2 * (1.0 + 0.05 * tilt.y->value * dir));
    =}

    reaction(lineSensor.left, lineSensor.center, lineSensor.right) -> reset(LineDetect), notify {=
      self->lineOnLeft = lineSensor.left->value;
      self->lineOnCenter = lineSensor.center->value;
      self->lineOnRight = lineSensor.right->value;
      if (self->lineOnLeft || self->lineOnCenter || self->lineOnRight) {
        lf_set_mode(LineDetect);
        lf_set(notify, "LineDetect");
      }
    =}
  }

  mode LineDetect {
    logical action turn

    reaction(reset) -> motors.left_speed, motors.right_speed, turn, reset(DRIVING), notify {=
      // Back up; the turn starts when the action fires.
      lf_set(motors.left_speed, -0.2);
      lf_set(motors.right_speed, -0.2);
      if (self->lineOnCenter) {
        self->turn_angle = 180;
        self->turn_direction = 1;  // Direction doesn't matter.
      } else if (self->lineOnLeft) {
        self->turn_angle = 20;
        self->turn_direction = -1;  // Right, away from the line.
      } else if (self->lineOnRight) {
        self->turn_angle = 20;
        self->turn_direction = 1;  // Left, away from the line.
      } else {
        // No line detected: resume driving without scheduling a turn.
        lf_set_mode(DRIVING);
        lf_set(notify, "DRIVING");
        return;
      }
      lf_schedule(turn, MSEC(400));  // Back up for 400 ms, then turn.
    =}

    reaction(turn) -> reset(TURN), notify {=
      lf_set_mode(TURN);
      lf_set(notify, "TURN");
    =}
  }

  mode TURN {
    gyro = new GyroAngle()

    reaction(reset) -> motors.left_speed, motors.right_speed {=
      // Spin in place: +1 turns left (counterclockwise), -1 turns right.
      lf_set(motors.left_speed, self->turn_direction * -0.2);
      lf_set(motors.right_speed, self->turn_direction * 0.2);
    =}

    reaction(t) -> gyro.trigger {=
      lf_set(gyro.trigger, true);
    =}

    reaction(gyro.z) -> reset(DRIVING), notify {=
      // Stop turning once the measured rotation exceeds the target angle.
      if (fabs(gyro.z->value) > self->turn_angle) {
        lf_set_mode(DRIVING);
        lf_set(notify, "DRIVING");
      }
    =}
  }
}

main reactor {
  robot = new Robot()
  display = new Display()
  robot.notify -> display.line0
}
```
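The steering correction in the DRIVING mode's tilt reaction is easier to understand with numbers. This C sketch reproduces that formula as a standalone function; `WheelSpeeds` and `steer` are hypothetical names. The direction factor reverses the correction when the pitch is negative. When the robot faces downhill, a heading error to the same side produces a roll reading of the opposite sign. The worked numbers below assume tilt.y is reported in degrees.

```c
typedef struct {
    double left;  /* target speed in m/s */
    double right; /* target speed in m/s */
} WheelSpeeds;

/* Same formula as the tilt reaction: base speed scaled up on one side and
 * down on the other in proportion to roll (tilt_y); the sign of the
 * correction flips when the pitch (tilt_x) is negative, i.e. descending. */
WheelSpeeds steer(double base, double gain, double tilt_x, double tilt_y) {
    double dir = (tilt_x >= 0) ? 1.0 : -1.0;
    WheelSpeeds s;
    s.left = base * (1.0 - gain * tilt_y * dir);
    s.right = base * (1.0 + gain * tilt_y * dir);
    return s;
}
```

With base = 0.2, gain = 0.05, and a roll reading of 2 while climbing, the left target drops to 0.2 * (1 - 0.1) = 0.18 m/s and the right rises to 0.22 m/s. The same reading while descending swaps the two targets.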

The primary building block of the above Lingua Franca program is the reactor, which behaves somewhat like an object. A reactor has state variables, input and output ports, and reactions that run in response to startup, timers, inputs, or logical and physical actions. A reactor can also be divided into modes, and its reactions can switch between them; here, the modes implement the states of our finite-state machine.

At the top level, the main reactor contains two reactors:

- Robot

- Display

The Robot reactor has four modes, one for each state of the finite-state machine:

- CALIBRATING

- DRIVING

- LINEDETECT

- TURN

The Robot reactor is always in exactly one of its modes. It starts in CALIBRATING and switches to DRIVING once calibration is complete. It stays in DRIVING, moving forward, until a line sensor sees a dark edge. It then switches to LINEDETECT, backs up a few centimeters, and uses the saved sensor readings to decide how to turn. If the center sensor saw the line, it turns 180 degrees; if only the left or right sensor did, it turns 20 degrees away from the line. It then enters TURN, which spins the robot in the chosen direction until the gyroscope reports that the target angle has been reached. DRIVING can also switch directly to TURN: when the tilt reading shows that the robot has reached the flat top, it performs a single 180-degree U-turn.
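The decision made in LINEDETECT can be moved out of the reaction into a plain C function. That makes it easy to unit-test on a desktop machine before flashing the robot. This sketch mirrors the branches of the LineDetect reset reaction. `TurnDecision` and `decide_turn` are hypothetical names, and a zero angle stands for the no-line case, in which the robot simply resumes driving.

```c
#include <stdbool.h>

typedef struct {
    int angle_deg; /* 0 means no turn: resume driving */
    int direction; /* +1 = left (counterclockwise), -1 = right (clockwise) */
} TurnDecision;

/* Same priority as the reaction: center first, then left, then right. */
TurnDecision decide_turn(bool left, bool center, bool right) {
    TurnDecision d = {0, 0};
    if (center) {
        d.angle_deg = 180; /* end of the wedge: turn around */
        d.direction = 1;   /* direction doesn't matter */
    } else if (left) {
        d.angle_deg = 20;
        d.direction = -1;  /* line on the left: turn right */
    } else if (right) {
        d.angle_deg = 20;
        d.direction = 1;   /* line on the right: turn left */
    }
    return d;
}
```

The center sensor is checked first, so a line seen by both the center and a side sensor still produces a 180-degree turn.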

Once the TURN state has carried out the decision made in LINEDETECT, control returns to the DRIVING state and its default behavior of moving forward. After the initial CALIBRATING state, the robot keeps cycling through the DRIVING, LINEDETECT, and TURN states to complete its task.

Finally, the Display reactor receives the Robot reactor's notify output and shows the current mode (for example, DRIVING, LineDetect, or TURN) on the robot's screen.

Analysis

The robot accomplishes its mission and stays clear of the dark-colored edges. The tilt feedback keeps the roll near zero, and the encoder feedback keeps the speed consistent both uphill and downhill. Once aligned with the ramp, the robot drives in a straight line thanks to these corrections. If the robot starts its task on top of a dark-colored edge, it detects the edge immediately and turns away from it.

Conclusion

This project combines sensor integration, feedback control, and real-world navigation. It offers insight into the challenges of robotics and a practical way to apply the concepts from embedded systems courses: modeling, designing, and analysis. The source code is available on GitHub. Stay tuned for more technical explorations in our upcoming blogs!

References

- Edward A. Lee and Sanjit A. Seshia, Introduction to Embedded Systems: A Cyber-Physical Systems Approach, Second Edition, MIT Press, 2017, ISBN 978-0-262-53381-2.

- Lingua Franca